Truth today is weighted by probability.

It affects how we interpret the world, and how we sound when we try to describe it.

A new sound of language

Something has happened to the way language sounds.

Many voices in our digital environments share the same rhythm, the same tone, the same narrative curve.

I scroll through feeds where every post feels familiar before I finish reading it. It is well-written, coherent, and often insightful, yet without a pulse of its own. It sounds relevant.

What we know deeply is often what language models fail to reproduce.

Where we know less, they excel. There, the text sounds good, confirming, and persuasive.

The evolution of language models is fascinating in itself. Since the launch of systems like ChatGPT, their persuasive quality has intensified, and with it their tendency to play to our confirmation bias.

Perhaps because humans are drawn to reassurance, but also because stakeholders demand engagement. The model must not only answer. It must retain us.

The more people rely on their language model, the stronger the need for it to appear trustworthy, friendly, and competent.

And perhaps that is where something new is taking shape: between our cognitive inclination to seek confirmation and the model’s need for retention.

New terms have entered our vocabulary: synthetic safety, AI psychosis, cognitive endurance.

They describe phenomena that are no longer theoretical but deeply embedded in our digital environments.

Cognitive integrity has become essential.

It is about awareness, about being present to how our thoughts are shaped.

Research in psychological defense and information resilience now touches on this same foundation: the ability to think freely within structures that constantly compete for our attention.

Cognitive integrity is about understanding how our thoughts are shaped by systems that reward reaction over reflection.

To protect it is to protect our ability to interpret, question, and connect before someone else does it for us.

AI and the language that changes

AI has given more people the ability to write, and that is a positive development. Many who once struggled to find words now have a way to express what they think.

But this raises a deeper question: how well do we understand the subjects we explore?

The word "explore" might sound like something a model would generate, but for me, language has always been personal.

I did not speak Swedish as a child, even though I was born and raised here. My home language set me apart, and perhaps that is why I wanted to master Swedish so fully. Language became something I could paint with.

Maybe that is also why I notice the subtleties that fade away.

I notice the negations that create drama, the punctuation that replaces a pause, the phrasing that repeats from post to post.

For those who work with language, a new question arises:

Will my writing be mistaken for that of a model?

We are creating a linguistic monoculture.

Nuance is disappearing, and fast.

To understand why, we need to look at how language is actually generated in large language models.

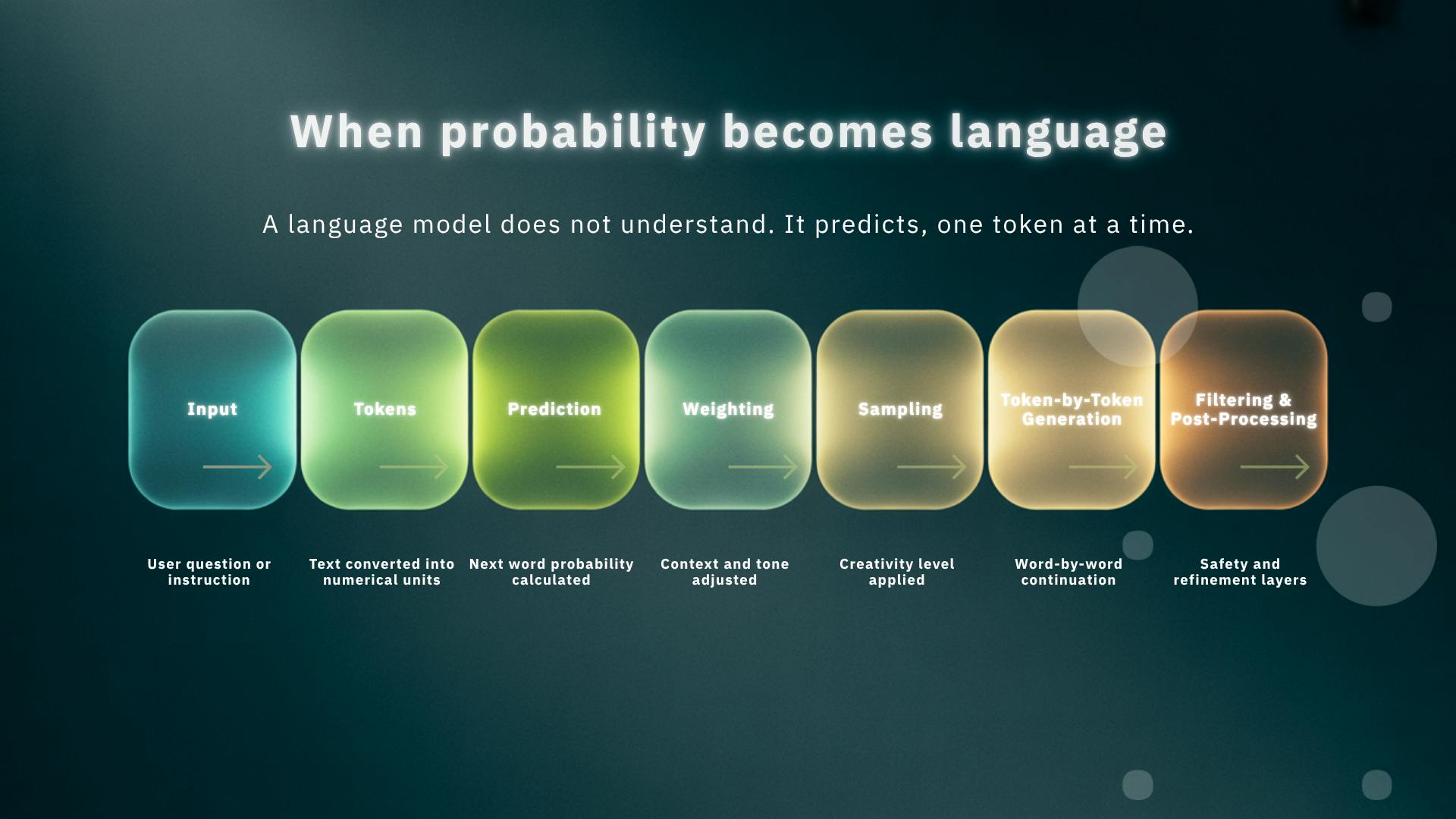

How language is generated in large models

Language models build language through probability rather than understanding. Every sentence is the outcome of a long chain of micro-decisions.
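Formally, this chain of micro-decisions is usually described with the chain rule of probability. In the standard textbook formulation for autoregressive language models (a general description, not the internals of any specific system), the probability of a token sequence $w_1, \dots, w_n$ factorizes as:

```latex
P(w_1, w_2, \dots, w_n) = \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1})
```

Generation walks through this product one factor at a time: the model estimates $P(w_t \mid w_1, \dots, w_{t-1})$, chooses $w_t$, and moves on to position $t + 1$.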

A simplified explanation:

- Input (prompt): You write a question, instruction, or sentence. The model converts it into numerical representations of words or parts of words (tokens).
- Prediction: It calculates which word is most likely to follow, based on patterns learned from the hundreds of billions, or even trillions, of words it was trained on.
- Weighting: The probability is adjusted according to context. The model considers the previous words to determine tone, relation, and coherence.
- Sampling (temperature): A setting determines how creative or predictable the model will be. A low temperature produces consistent, predictable results (which is not the same as accurate ones); a higher one creates variation but increases the chance of error.
- Token-by-token generation: Each word (strictly, each token) is created separately. The model guesses one, adds it, and then uses the new context to predict the next.
- Style and probability: Since the model is trained on vast amounts of text from articles, platforms, and forums, it often reproduces what occurs most frequently. The result is a probable language: familiar and smooth but lacking human texture.
- Filtering and post-processing: The text passes through safety layers that remove or modify inappropriate content, which further smooths tone and rhythm.

The outcome is a language that feels balanced and recognizable, but where the human texture born of hesitation, experience, and evaluation has been polished away.
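The temperature setting in the list above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular model is implemented: the candidate words and their raw scores (logits) are invented, and a real model computes scores for every token in a vocabulary of tens of thousands. Dividing the scores by the temperature before the softmax sharpens the distribution when the temperature is low and flattens it when it is high.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(candidates, logits, temperature, rng):
    """Pick one candidate at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical next-word candidates and scores, invented for illustration.
candidates = ["important", "essential", "crucial", "luminous"]
logits = [3.0, 2.5, 2.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)
print(sample_next(candidates, logits, 1.0, rng))
```

At a temperature of 0.2, roughly nine tenths of the probability lands on "important"; at 2.0, the rarer "luminous" gets about a one-in-ten chance. The scores themselves never change, only how sharply they are turned into choices.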

For those who wish to dive deeper, a more detailed version of this list is included at the end of the article.

From logic to probability

When language models weigh words by probability, something fundamental changes: our sense of logic.

What used to be cause and effect becomes likelihood and relevance.

The model does not need to understand. It only needs to predict.
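To make "it only needs to predict" concrete, here is a deliberately crude sketch: a bigram model that counts which word follows which in a tiny invented corpus, then generates text greedily by always choosing the most frequent continuation. Real language models are neural networks conditioned on long contexts, not word-pair counters; this toy only illustrates that prediction can run on frequency alone, with no notion of meaning.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate_greedy(follows, start, max_words):
    """Word-by-word generation: always append the most frequent continuation."""
    output = [start]
    for _ in range(max_words - 1):
        options = follows.get(output[-1])
        if not options:
            break  # no continuation was ever observed for this word
        output.append(options.most_common(1)[0][0])
    return " ".join(output)

# A tiny, invented corpus; real models learn from vastly more text.
corpus = (
    "this is not just a tool it is a shift "
    "this is not just a trend it is a shift "
    "this is a quiet morning"
)
model = train_bigrams(corpus)
print(generate_greedy(model, "this", 8))
```

Starting from "this", the loop settles into "this is a shift this is a shift": the most frequent pattern wins, and the rarer "quiet morning" never surfaces. It is a caricature, but it shows how a purely statistical predictor drifts toward the familiar.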

Deepfakes and synthetic voices draw attention, but the subtler change happens in everyday information flows.

In digital environments, what holds our attention often outweighs truth itself.

At first, it was videos of animals doing something funny or unusual.

Now many of them are AI-generated.

We pause, smile, wonder for a second if it could be real.

That second is enough.

The algorithm measures our hesitation, learns from it, and selects, or even generates, the next video with more precision.

Truth becomes an optimization metric.

The question is no longer "Is it true?" but "How long did you stay?"

Probability becomes a currency, refined with every interaction.

When enough people perceive something as credible, truth itself becomes secondary. What remains is a new kind of reality, one measured in reactions rather than facts.

When truth becomes probabilistic, it demands more of us.

Awareness, endurance, and the cognitive strength to see through systems designed to speak our language, both literal and emotional.

The human dimension

Awareness is our flashlight.

It shines where we choose to look, and what we illuminate becomes real to us.

But in a landscape where everything competes for light, presence becomes an act of endurance.

Our ability to make sense of the world arises from both data and the pause between inputs.

It is in that pause that we weigh, interpret, and connect.

As those spaces shrink, our metacognitive muscle, the one that allows us to think about our own thinking, weakens.

Cognitive endurance means staying in uncertainty without rushing toward closure.

We can allow thoughts to rest and evolve.

Understanding often grows in the open intervals, not in the conclusions.

To hold a thought without finishing it is an act of intellectual care. It gives us time to mature our understanding before we take a position.

Each time we let a model decide for us, we weaken the part of ourselves that learns from evaluating.

It happens gradually.

Just as muscles fade without exercise, so does our discernment when we stop using it.

Protecting the human dimension means staying active in interpretation.

To read, to listen, to understand, but also to question.

To let the light reach further than the first impression.

Cognitive Integrity as counterbalance

Cognitive integrity means staying whole within technology, maintaining structure in thought as the world becomes more probabilistic.

To see, interpret, and evaluate without losing direction.

It begins with awareness: noticing what shapes us, what patterns we reinforce, and how we contribute to the system that learns from us.

Every click, pause, and hesitation becomes data. Recognizing that is the first step toward reclaiming interpretation.

Cognitive integrity can be trained like a muscle or a language: by reading slowly, conversing without a goal, reflecting before reacting, and asking questions that do not require answers.

It means reintroducing depth into an environment that rewards speed.

Creating small islands of reflection where information is processed rather than merely consumed.

For organizations, it means building cultures where reflection is part of the work.

Decisions grounded in understanding rather than only data.

Knowledge measured by how well it integrates, not by how quickly it is produced.

For each of us, it means taking responsibility for our own cognitive environment.

Choosing which streams of information to inhabit.

Allowing certain questions to remain open until they feel grounded.

Cognitive integrity is, at its core, the ability to remain capable of understanding.

It is about meeting the future with direction and awareness, so we do not lose ourselves within it.

When truth becomes plural

AI has become the new normal.

It feels natural to turn to a model for answers, advice, or reassurance.

Many conversations now begin there, even personal ones.

Studies show that people increasingly discuss private matters with their LLMs: thoughts on health, relationships, stress, or everyday decisions.

The model is always available, composed, and non-judgmental.

It responds quickly, follows our reasoning, and makes us feel understood.

In a single dialogue, it can move from psychology to nutrition, from paint color to a child’s lunchbox.

A universal companion, seamless and convenient.

Yet a loop emerges.

How do we know that what we receive is factual and competent?

Answers from language models can contain inaccuracies, yet they are delivered with confidence and fluency.

They rarely say: “I’m not sure, but based on my experience, I would guess this.”

That is why it becomes difficult to separate certainty from probability.

When something sounds both competent and confirming, our biases awaken.

We trust not because we know, but because it sounds trustworthy.

It is the modern illusion of omniscience, synthetic safety disguised as knowledge.

Understanding this requires a new relationship to truth, one that sees it as a dynamic field where interpretation, technology, and human experience interact. That is where cognitive integrity becomes essential: the inner compass that helps us see nuance even in what feels self-evident.

AI will remain part of our lives, and that is good.

But as truth becomes plural, our thinking must become as precise as the technology shaping our language.

Only then can we use it as a resource without letting it shape our judgment.

How Language Models Work, Technically

Below is the extended version of the simplified list described earlier in the article.

- Tokenization: Text is broken into small units, tokens, often subwords. Each token is represented as a vector (an embedding) in a high-dimensional space.
- Context window: The model processes not only the last word but the entire sequence of tokens in its context window (in current models, typically tens to hundreds of thousands of tokens, depending on the model and configuration). This provides coherence and a form of short-term memory.
- Prediction: The model calculates a probability distribution over possible next tokens and selects one using decoding parameters such as temperature, top-k, or top-p (nucleus) sampling.
- Self-attention: This mechanism lets each token weigh its relationship to the other tokens in the sequence based on relevance and position, creating a context-aware representation of each token. In generative models such as GPT, each token can attend only to the tokens that come before it.
- Generation: Text is produced token by token. After each addition, the context updates and informs the next prediction.
- Fine-tuning and RLHF: After pretraining, the model is refined through fine-tuning and reinforcement learning from human feedback (RLHF): human raters compare or score answers, a reward model is trained on those judgments, and the model is then optimized toward answers judged helpful, accurate, and safe.
- Filtering and moderation: Post-processing filters remove or modify outputs considered inappropriate or misleading.
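The top-k and top-p options named in the prediction step can be sketched as filters that trim the next-token distribution before a token is drawn. This is a minimal sketch under stated assumptions: the five-token distribution is invented, and real decoders apply these filters to the full vocabulary, usually together with temperature, with details that vary between libraries.

```python
import random

def top_k_filter(probs, k):
    """Keep only the k most probable tokens and renormalize so they sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in ranked)
    return {tok: p / total for tok, p in ranked}

def top_p_filter(probs, p):
    """Nucleus (top-p) filter: keep the smallest set of most probable tokens
    whose cumulative probability reaches p, then renormalize."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {tok: prob / total for tok, prob in kept}

def sample(probs, rng):
    """Draw one token at random, weighted by its (filtered) probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# An invented next-token distribution, for illustration only.
next_token = {"clear": 0.45, "simple": 0.25, "nuanced": 0.15, "strange": 0.10, "velvet": 0.05}

print(top_k_filter(next_token, 2))    # only "clear" and "simple" remain
print(top_p_filter(next_token, 0.8))  # "clear", "simple", "nuanced" (0.45 + 0.25 + 0.15 >= 0.8)
print(sample(top_p_filter(next_token, 0.8), random.Random(42)))
```

Both filters cut away the unlikely tail before sampling. That reduces odd choices, but it also removes the rare word before it ever has a chance to appear.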

For more on RLHF and reward systems, see What Is Reinforcement Learning?
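The self-attention step in the technical list can also be sketched. Below is a single attention head in plain Python, using scaled dot-product scores (the dot product of a query and a key divided by the square root of the vector dimension) and a causal mask, so that each position only looks at itself and earlier positions, as in generative models. It is a simplification: the query, key, and value vectors are invented two-dimensional numbers, whereas a real model derives them from learned projections of token embeddings and runs many heads in parallel across many layers.

```python
import math

def softmax(xs):
    """Normalize scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask:
    position i may only attend to positions 0..i."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Relevance of each visible token: query of i against keys of 0..i.
        scores = [dot(q, keys[j]) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[j][dim] for j, w in enumerate(weights))
            for dim in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three tokens with invented 2-dimensional vectors (hypothetical, for illustration).
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = causal_self_attention(q, k, v)
for row in weights:
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, so its weight is 1. The third token sees all three positions and leans most on the one whose key best matches its query.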

Finding your own tone in collaboration with AI

Finding your own tone in collaboration with a language model is part of the new practice of writing.

It begins with a conscious dialogue with the technology, where your reasoning sets the direction.

A simple yet essential principle is to let your prompt begin in your own thought.

Express your idea, your perspective, and what you want to convey.

The first text you receive is a draft, raw material.

It still carries the model’s generic language and needs to be refined, sharpened, and given nuance.

Your tone emerges in that process.

Between the model’s probability and your judgment, the language that becomes yours takes shape.

Language models can give more people the ability to express themselves.

They can serve as tools to put words to thoughts that would otherwise remain unspoken.

Yet the same technology that amplifies our voice can also begin to shape our reasoning.

If we are not active in the process, we risk letting the model think for us, and the nuance fades.

It is through reflection, rephrasing, and deliberate choice that language becomes our own.

Further Reading

This article is part of the series Metacognitive Reflections, which explores how cognition, attention, and technology interact.

- Cognitive Integrity: A System Condition in the Information Age. An introduction to cognitive integrity as a sustainability condition for digital systems.
- Language Models as External Memory: What Happens to Our Internal Capacity. On how our relationship with knowledge changes when memory moves outside ourselves.
- Synthetic Safety: How AI’s Logic of Confirmation Shapes Cognition. A reflection on how confirmation and predictability shape our trust in machine-generated language.
- When the Brain Is Shaped by the System, and What Changes Faster Than We Think. On how digital structures influence cognitive development and the ability to reflect.